The Fine Line Between Personalization and Manipulation

AI is reshaping the way we experience online shopping. From personalized recommendations to dynamic pricing and adaptive interfaces, platforms are becoming smarter at anticipating what users want—often before users even know it themselves.

The benefits are clear: higher engagement, better conversion rates, and more efficient digital journeys.

But there’s a catch.

As AI gets better at predicting behavior, users may start to feel manipulated rather than helped. When that happens, personalization can do more harm than good.

How Can Personalization Backfire?

Not all personalization works. It’s not just about what you personalize, but how you deliver it.

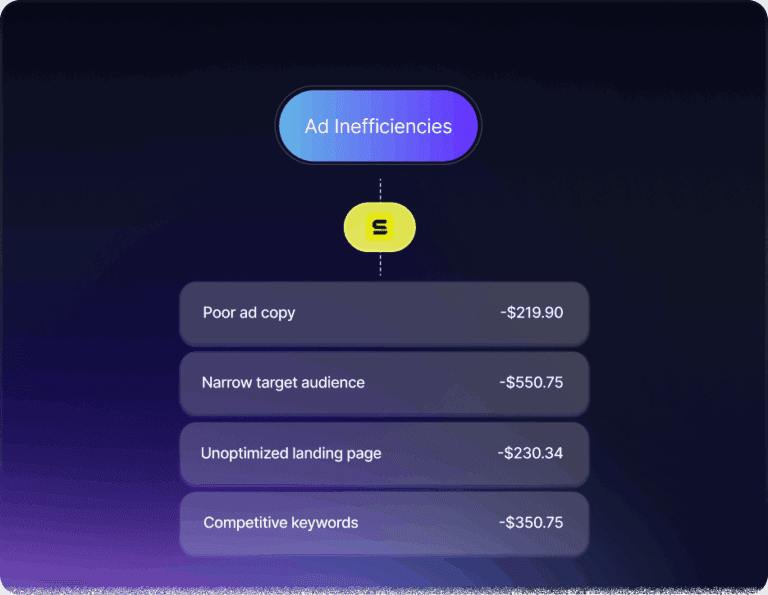

When users are shown suggestions or pricing changes without any context, they are more likely to read them as manipulation than as help. And once trust erodes, those suggestions lose much of their effectiveness.

This effect is tied to a behavioral science principle known as the transparency heuristic. Put simply, people are more likely to trust systems they understand. When AI explains why it’s making a recommendation, users feel respected and in control. When it doesn’t, they may feel watched, misunderstood, or even deceived.

There’s also the issue of reactance, a psychological response in which people resist being influenced when they feel their freedom of choice is being restricted. Even small design choices can unintentionally trigger it. For example, an unexplained countdown timer might create urgency, or it might make someone abandon their cart because it feels like a pressure tactic.

Designing AI Experiences That Respect Users

If you’re using AI to improve your user journey, you’re moving in the right direction. But if your system lacks transparency or doesn’t give users a sense of control, it may be eroding the very trust that personalization depends on.

Here’s how to design personalization that works with human psychology, not against it:

1. Add Simple Explanations to Personalization

A short, contextual label can transform how a recommendation is received. Instead of a mysterious suggestion, it becomes a meaningful one.

Examples:

- “Recommended because you viewed X”

- “Popular with users near you”

- “Based on your recent activity”

These explanations act as justification cues: small signals that clarify intent and increase perceived relevance without raising suspicion.
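In an implementation, the label is most trustworthy when it is derived from the signal that actually produced the recommendation rather than picked from a generic list. The sketch below assumes a recommender that records a reason alongside each suggestion; `Reason`, `Recommendation`, and `explanation_label` are hypothetical names for illustration, not part of any particular library.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Reason(Enum):
    """Hypothetical signals a recommender might record with each suggestion."""
    VIEWED_ITEM = "viewed_item"
    POPULAR_NEARBY = "popular_nearby"
    RECENT_ACTIVITY = "recent_activity"


@dataclass
class Recommendation:
    product_name: str
    reason: Reason
    # Item that triggered the suggestion, if the reason involves one (hypothetical field).
    source_item: Optional[str] = None


def explanation_label(rec: Recommendation) -> Optional[str]:
    """Build a short, user-facing reason from the signal that produced `rec`.

    Returns None when no honest explanation is available, so the UI can
    omit the label instead of showing a vague one.
    """
    if rec.reason is Reason.VIEWED_ITEM:
        if rec.source_item is None:
            return None
        return f"Recommended because you viewed {rec.source_item}"
    if rec.reason is Reason.POPULAR_NEARBY:
        return "Popular with users near you"
    if rec.reason is Reason.RECENT_ACTIVITY:
        return "Based on your recent activity"
    return None


rec = Recommendation("Trail running shoes", Reason.VIEWED_ITEM, source_item="Hiking socks")
print(explanation_label(rec))  # Recommended because you viewed Hiking socks
```

Returning `None` instead of a catch-all label is a deliberate choice in this sketch: an inaccurate explanation may damage trust more than showing no explanation at all.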

2. Make Dynamic Pricing and Scarcity Understandable

Time-limited offers and fluctuating prices can be effective, but only when users perceive them as fair.

Instead of just showing a countdown or a changed price, add a brief reason:

- “This price reflects current demand in your area”

- “Available for the next 20 minutes due to limited inventory”

This draws on procedural fairness: the idea that people are more accepting of outcomes when the process behind them feels reasonable and transparent.
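Procedural fairness only holds if the explanation is true, so one approach is to generate these messages from conditions that actually apply to a quote rather than displaying them by default. A minimal sketch, assuming a hypothetical `PriceQuote` record that flags demand-based adjustments and stores a real offer expiry time; the "limited inventory" wording is also an assumption about why the offer ends.

```python
import math
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional


@dataclass
class PriceQuote:
    amount: float
    base_amount: float
    # Hypothetical flag: True only if the pricing system actually adjusted for local demand.
    demand_adjusted: bool = False
    # Hypothetical field: when the limited-inventory offer really ends (None if no deadline).
    offer_expires_at: Optional[datetime] = None


def pricing_notes(quote: PriceQuote, now: datetime) -> List[str]:
    """Return explanations only for conditions that genuinely apply to this quote."""
    notes: List[str] = []
    # Explain a price change only if demand was the real driver and the price did change.
    if quote.demand_adjusted and quote.amount != quote.base_amount:
        notes.append("This price reflects current demand in your area")
    # Show a deadline only if one exists and has not passed; never invent a countdown.
    if quote.offer_expires_at is not None and quote.offer_expires_at > now:
        seconds_left = (quote.offer_expires_at - now).total_seconds()
        minutes_left = math.ceil(seconds_left / 60)  # round up so a live offer never shows 0
        unit = "minute" if minutes_left == 1 else "minutes"
        notes.append(f"Available for the next {minutes_left} {unit} due to limited inventory")
    return notes


now = datetime(2024, 11, 1, 12, 0)
quote = PriceQuote(amount=54.0, base_amount=49.0, demand_adjusted=True,
                   offer_expires_at=now + timedelta(minutes=20))
for note in pricing_notes(quote, now):
    print(note)
```

Tying each message to a concrete condition means a countdown disappears when there is no real deadline, which avoids the kind of unexplained pressure that can trigger reactance.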

3. Give Users a Sense of Control

Offering even basic customization options helps reduce resistance and improve satisfaction.

Consider letting users:

- Hide irrelevant recommendations

- Indicate “Not interested”

- Choose to “Show me more like this”

These micro-interactions restore a sense of autonomy, one of the core psychological needs behind user engagement. People don’t mind being guided, as long as they feel they have a say in the process.
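Here is a minimal sketch of how these three controls might feed back into what the user sees, assuming each recommendation carries a category and a relevance score. `Item`, `FeedbackProfile`, and the default boost of 0.5 are hypothetical. In this version, "Hide" removes one item, "Not interested" mutes its whole category (an assumed policy), and "Show me more like this" raises that category's ranking. A production recommender would more likely pass these signals back into the model itself.

```python
from dataclasses import dataclass
from typing import Dict, List, Set


@dataclass
class Item:
    item_id: str
    category: str
    score: float  # relevance score from the recommender (hypothetical)


class FeedbackProfile:
    """Records explicit user choices and applies them on top of model scores."""

    def __init__(self) -> None:
        self.hidden_items: Set[str] = set()
        self.muted_categories: Set[str] = set()
        self.category_boost: Dict[str, float] = {}

    def hide(self, item: Item) -> None:
        # "Hide": remove this single item from future lists.
        self.hidden_items.add(item.item_id)

    def not_interested(self, item: Item) -> None:
        # "Not interested": mute the item's whole category (an assumed policy).
        self.muted_categories.add(item.category)

    def more_like_this(self, item: Item, boost: float = 0.5) -> None:
        # "Show me more like this": raise the category's ranking and unmute it.
        self.category_boost[item.category] = self.category_boost.get(item.category, 0.0) + boost
        self.muted_categories.discard(item.category)

    def rerank(self, items: List[Item]) -> List[Item]:
        """Drop hidden or muted items, then sort by boosted score, highest first."""
        visible = [
            i for i in items
            if i.item_id not in self.hidden_items and i.category not in self.muted_categories
        ]
        return sorted(
            visible,
            key=lambda i: i.score + self.category_boost.get(i.category, 0.0),
            reverse=True,
        )


items = [Item("a", "shoes", 0.9), Item("b", "socks", 0.8),
         Item("c", "hats", 0.7), Item("d", "shoes", 0.6)]
profile = FeedbackProfile()
profile.hide(items[0])
profile.not_interested(items[1])
profile.more_like_this(items[2])
print([i.item_id for i in profile.rerank(items)])  # ['c', 'd']
```

Applying explicit choices as immediate filters and boosts, rather than waiting for the model to relearn, makes the effect of each click visible right away, which supports the sense of having a say in the process.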

The Bottom Line: AI Personalization Should Feel Respectful, Not Controlling

Personalization is powerful—but with that power comes responsibility.

The most effective AI systems aren’t just accurate; they’re transparent, fair, and user-aware. When personalization feels like a collaboration instead of a black box, users are more likely to trust it—and act on it.

In a digital world where trust is increasingly hard to earn, thoughtful design grounded in behavioral science can offer a real competitive edge.

Source: Harvard Business Review

https://hbr.org/2024/11/personalization-done-right